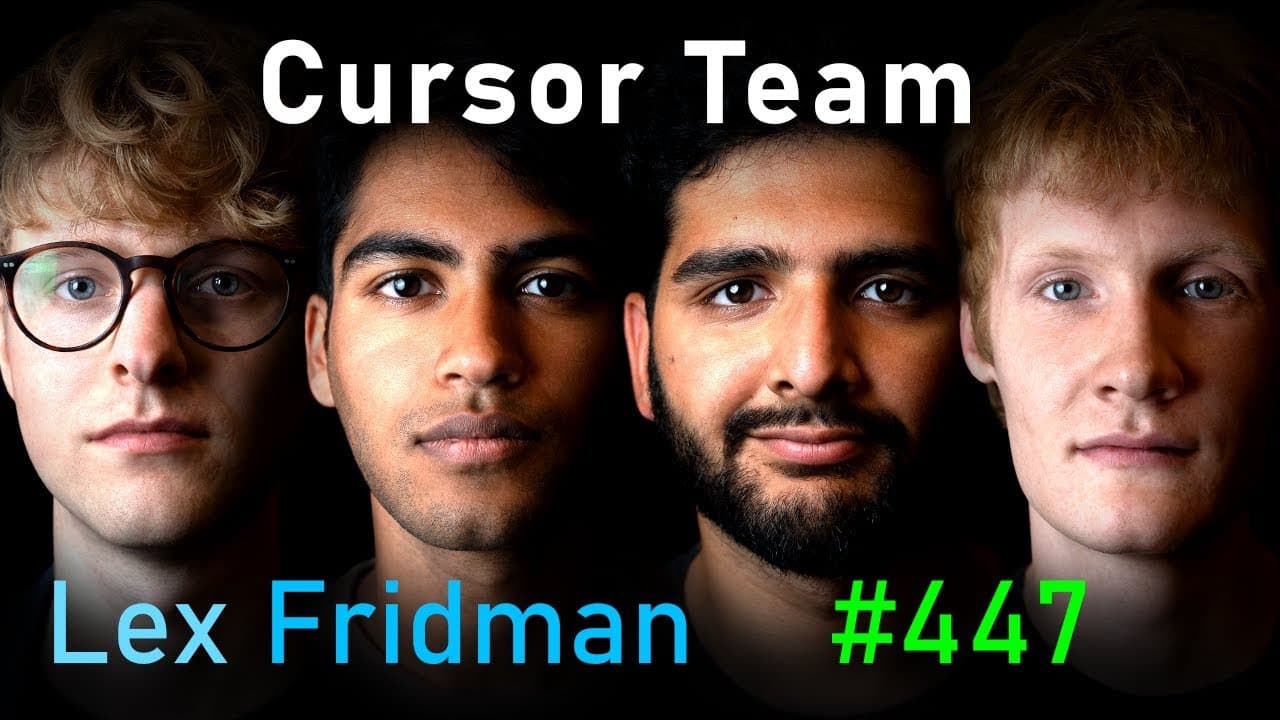

Cursor Team: Future of Programming with AI | Lex Fridman Podcast

07 Oct 2024

Introduction (0s)

- The conversation is with the founding members of the Cursor team: Michael Truell, Sualeh Asif, Arvid Lunnemark, and Aman Sanger (0s).

- Cursor is a code editor based on VS Code that adds powerful features for AI-assisted coding (8s).

- The code editor has gained significant attention and excitement from the programming and AI communities (18s).

- The conversation aims to explore the role of AI in programming, which is a broader topic than just about one code editor (22s).

- The discussion is expected to be super technical and will cover the future of programming and human-AI collaboration in designing and engineering complicated systems (27s).

- The conversation is part of the Lex Fridman Podcast, and viewers are encouraged to support the podcast by checking out the sponsors in the description (46s).

- The guests, Michael, Arvid, and Aman, are introduced at the beginning of the conversation (50s).

Code editor basics (59s)

- The primary function of a code editor is to serve as the place where software is built, and for a long time, this has meant text editing a formal programming language (1m6s).

- A code editor can be thought of as a "souped up word processor" for programmers, offering features such as visual differentiation of code tokens, navigation, and error checking due to the structured nature of code (1m22s).

- Code editors provide features that word processors cannot, such as giving visual differentiation of code tokens, navigating around the code base, and error checking to catch rudimentary bugs (1m33s).

- Traditional code editors are expected to change significantly over the next 10 years as the process of building software evolves (2m4s).

- A key aspect of a code editor is that it should be fun to use, with "fun" being closely related to speed, as fast performance is often enjoyable (2m17s).

- The speed of coding is a significant draw for many people, as it allows for rapid integration and the ability to build cool things quickly with just a computer (2m52s).

- Michael, Aman, and Arvid from the Cursor team emphasize that a code editor should be enjoyable and fast, with speed being a crucial part of what makes coding fun (2m47s).

GitHub Copilot (3m9s)

- The team behind Cursor, a fork of VS Code, were originally Vim users who switched to VS Code because of Copilot, a powerful autocomplete tool that suggests one to three lines of code at a time. It feels intimate and cool when it works well, but adds some friction when it doesn't (3m32s).

- Copilot's beta was released in 2021. It was the first real AI product and the first language model consumer product, which made it the first killer app for LLMs (5m24s).

- The team was drawn to VS Code because of Copilot, and the experience of using Copilot with VS Code was enough to convince them to switch from Vim (4m8s).

- The origin story of Cursor began around 2020 with the release of the scaling laws papers from OpenAI, which predicted clear and predictable progress for the field of machine learning, making it seem like bigger models and more data would lead to better results (5m35s).

- The scaling laws papers sparked conceptual conversations about how knowledge worker fields would be improved by advancements in AI technology, and the team started thinking about building useful systems in AI (6m11s).

- The theoretical gains predicted in the scaling laws papers started to feel concrete, and it seemed like a moment where the team could build useful systems in AI without needing a PhD (6m29s).

- The team's experience with Copilot and the predictions of the scaling laws papers led to the development of Cursor, a new editor that aims to integrate AI technology into the coding process (5m31s).

- The next big moment in AI development was getting early access to GPT-4 at the end of 2022, which felt like a significant step up in capabilities (6m55s).

- Prior to this, work was being done on tools for programmers, including those for financial professionals and static analysis with models (7m16s).

- The advancements in GPT-4 made it clear that this technology would not be a point solution, but rather a fundamental shift in how programming is done, requiring a different type of programming environment (7m48s).

- Aman, a roommate and IMO gold winner, had a bet in June 2022 that models would win a gold medal in the IMO by June or July 2024, which was initially thought to be unlikely but turned out to be very nearly correct (8m17s).

- The results from DeepMind showed that Aman's enthusiasm for AI was justified, and he was only one point away from being technically correct (9m11s).

- Aman's interest in AI was evident even before this, as he had a scaling laws t-shirt with charts and formulas that he would wear (9m20s).

- A conversation with Michael about scaling laws led to a deeper understanding of the potential of AI and a more optimistic outlook on progress (9m30s).

- However, it's noted that progress may vary depending on the domain, with math being a particularly promising area due to the ability to verify the correctness of formal theories (10m7s).

Cursor (10m27s)

- Cursor is a fork of VS Code, one of the most popular editors, with the goal of rethinking how AI is integrated into the editing process to take advantage of its rapidly improving capabilities (10m29s).

- The decision to fork VS Code was made to avoid limitations of being a plugin to an existing coding environment and to have more control over building features (11m31s).

- The idea of creating an editor seemed self-evident to achieve the goal of changing how software is built, with expected big productivity gains and radical changes in the active building process (11m34s).

- The Cursor team believes that being ahead in AI programming capabilities, even by just a few months, makes their product much more useful, and they aim to make their current product look obsolete in a year (12m50s).

- The team thinks that Microsoft, the company behind VS Code, is not in a great position to keep innovating and pushing the boundaries of AI programming, leaving room for a startup like Cursor to rapidly implement features and push the ceiling (13m5s).

- The Cursor team focuses on capabilities for programmers rather than just features, and they aim to try out new ideas and implement the useful ones as soon as possible (13m25s).

- The team is motivated by their own frustration with the slow pace of innovation in coding experiences, despite the rapid improvement of models, and they want to create new things that take advantage of these advancements (13m58s).

- The development of AI-powered tools like Copilot and Cursor is changing the way people interact with code, with Cursor aiming to provide a more comprehensive and integrated experience by developing the user interface and the model simultaneously (14m38s).

- The Cursor team works in close proximity, often with the same person working on both the UI and the model, allowing for more experimentation and collaboration (15m19s).

- Cursor has two main features that help programmers: looking over their shoulder and predicting what they will do next, and helping them jump ahead of the AI and tell it what to do (16m2s).

- The first feature, predicting what the programmer will do next, has evolved from predicting the next character to predicting the next entire change, including the next diff and the next place to jump to (16m29s).

- The second feature, helping programmers jump ahead of the AI, involves going from instructions to code and making the editing experience ergonomic and smart (16m42s).

- Cursor's Tab feature is an example of autocomplete on steroids, providing an all-knowing and all-powerful tool that can help programmers write code more efficiently (15m42s).

- The development of Cursor is focused on making the editing experience fast, smart, and ergonomic, with a lot of work going into making the model give better answers and provide a more integrated experience (15m9s).

Cursor Tab (16m54s)

- The goal was to create a model that could edit code, which was achieved after multiple attempts, and then efforts were made to make the inference fast for a good user experience (16m55s).

- The model was incorporated with the ability to jump to different places in the code, which was inspired by the feeling that once an edit is accepted, it should be obvious where to go next (17m21s).

- The idea was to press the tab key to go to the next edit, making it a seamless experience, and the internal competition was to see how many tabs could be pressed without interruption (17m52s).

- The concept of "zero entropy" edits was introduced, where once the intent is expressed, there's no new information to finish the thought, and the model should be able to read the user's mind and fill in the predictable characters (18m12s).

- Language model loss on code is lower than on language, meaning there are many predictable tokens and characters in code, making it easier to predict the user's next actions (18m43s).

- The goal of Cursor Tab is to eliminate low-entropy actions and jump the user forward in time, skipping predictable characters and actions (19m10s).

- Next-cursor prediction requires training small models on the task so that latency stays very low. These models are prefill-token hungry: they read very long prompts but generate few tokens, which calls for sparse models and speculative decoding (19m31s).

- The use of sparse models and a variant of speculative decoding called "speculative edits" were key breakthroughs in improving the performance and speed of the model (19m56s).

- The model uses a mixture of experts, with a huge input and small output, to achieve high-quality and fast results (20m21s).

- Caching plays a significant role in improving the performance of AI-powered programming tools by reducing latency and the load on GPUs, as re-running the model on all input tokens for every keystroke would be computationally expensive (20m31s).

- To optimize caching, the prompts used for the model should be designed to be caching-aware, and the key-value cache should be reused across requests to minimize the amount of work and compute required (20m52s).

- The near-term goals for AI-powered programming tools include generating code, filling empty space, editing code across multiple lines, jumping to different locations inside the same file, and jumping to different files (21m6s).

- The tools should also be able to predict the next action, such as suggesting commands in the terminal based on the code written, and providing the necessary information to verify the correctness of the suggestions (21m36s).

- Additionally, the tools should be able to provide the human programmer with the necessary knowledge to accept the next completion, such as taking them to the definition of a function or variable (22m2s).

- Integrating AI-powered programming tools with other systems, such as SSH, to provide a seamless experience for the programmer is also a potential goal (22m16s).

- The ultimate goal is to create a system where the next 5 minutes of programming can be predicted and automated, allowing the programmer to disengage and let the system take over, or to see the next steps and tap through them (22m42s).

- The system should be able to learn from the programmer's recent actions and adapt to their workflow, making it a useful tool for improving productivity (22m51s).
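
The caching-aware prompt design mentioned above can be illustrated with a minimal sketch. It assumes that a key-value cache can only be reused for the longest token prefix shared with the previous request; the helper names (`shared_prefix_len`, `build_prompt`) and the token lists are hypothetical and are not taken from Cursor.

```python
from typing import List, Sequence


def shared_prefix_len(cached: Sequence[str], new: Sequence[str]) -> int:
    """Number of leading tokens that are identical in both prompts."""
    n = 0
    for a, b in zip(cached, new):
        if a != b:
            break
        n += 1
    return n


def build_prompt(stable: List[str], volatile: List[str], stable_first: bool) -> List[str]:
    """Assemble a prompt from a slow-changing part and a per-keystroke part."""
    return stable + volatile if stable_first else volatile + stable


# Hypothetical tokens: the file context rarely changes, the typed text changes often.
file_context = ["def", "area", "(", "r", ")", ":"]
typed_before = ["return", "3.14"]
typed_after = ["return", "3.14", "*", "r"]

for stable_first in (True, False):
    old = build_prompt(file_context, typed_before, stable_first)
    new = build_prompt(file_context, typed_after, stable_first)
    reused = shared_prefix_len(old, new)
    print(f"stable_first={stable_first}: reuse {reused}, recompute {len(new) - reused}")
```

Under these assumptions, putting the slow-changing context first lets most of the prompt be served from cache after each keystroke, while putting the volatile text first invalidates almost everything after it.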

Code diff (23m8s)

- The Cursor team is working on a diff interface that allows users to review and accept changes suggested by the AI model, with different types of diffs for autocomplete, reviewing larger blocks of code, and multiple files (23m12s).

- The team has optimized the diff for autocomplete to be fast and easy to read, with a focus on minimizing distractions and allowing users to focus on a specific area of the code (23m59s).

- The current interface has a box on the side that shows the changes, but the team has experimented with different approaches, including blue crossed-out lines, red highlighting, and a Mac-specific option button that highlights regions of code (24m17s).

- The option button approach was intended to provide a hint that the AI had a suggestion, but it was not intuitive and required users to know to hold the button to see the suggestion (25m33s).

- The team recognizes that the current diff interface is not perfect and is working on improvements, particularly for large edits or multiple files, where reviewing diffs can be prohibitive (26m15s).

- One idea being explored is to prioritize parts of the diff that are most important and have the most information, while minimizing or hiding less important changes (26m36s).

- The idea of using AI to improve the programming experience, particularly in code review, is exciting, as it can help guide human programmers through the code and highlight important pieces, potentially using models that analyze diffs and mark likely bugs with red squiggly lines (26m46s).

- The goal is to optimize the experience, making it easier for humans to review code, and using intelligent models to do so, as current diff algorithms are not intelligent and do not care about the content (27m22s).

- As models get smarter, they will be able to propose bigger changes, requiring more verification work from humans, making it harder and more time-consuming (27m51s).

- Code review across multiple files is a challenging task, and GitHub tries to solve this, but it can be improved using language models, which can point reviewers towards the regions that matter (28m13s).

- Using language models can significantly improve the review experience, especially when the code is produced by these models, as the review experience can be designed around the reviewer's needs (28m50s).

- The code review experience can be redesigned to make the reviewer's job more fun, easy, and productive, rather than trying to make it look like traditional code review (29m21s).

- One idea is to use models to guide reviewers through the code, ordering the files in a logical way, so they can understand the code more easily (29m42s).

- The goal is not necessarily to make programming entirely natural language-based, as sometimes programming requires working directly with code, and natural language may not be the most efficient way to do so (30m6s).

- Implementing functions and explaining desired outcomes to others, such as Sualeh, can be frustrating, and sometimes it's easier to take over the keyboard and show examples to communicate effectively (30m30s).

- Similarly, when working with AI, showing examples can be the easiest way to communicate and achieve the desired outcome, as it allows the AI to understand and apply the concept elsewhere (30m48s).

- In certain situations, such as designing a website, it's more effective to demonstrate the desired outcome by dragging or drawing elements rather than providing verbal instructions (30m54s).

- Future advancements may include brain-machine interfaces, enabling AI to understand human thoughts and intentions (31m7s).

- Natural language will still have a place in programming, but it's unlikely to be the primary method used by most people most of the time (31m13s).

ML details (31m20s)

- Cursor works via an ensemble of custom models that have been trained alongside frontier models, which are fantastic at reasoning-intensive tasks, and these custom models can specialize in specific areas to be even better than frontier models (31m32s).

- The custom models are necessary for tasks such as applying changes to code, as frontier models can struggle with creating diffs, especially in large files, and often mess up simple things like counting line numbers (32m8s).

- To alleviate this issue, Cursor lets the frontier model sketch out a rough code block indicating the change, and a separately trained "apply" model then looks at the file and works out how to apply that change to it (32m36s).

- Combining the model's sketch with the actual file is not a deterministic algorithm and can be challenging; shallow imitations of "apply" elsewhere often break, resulting in a poor product experience (32m57s).

- The "apply" model allows for the use of fewer tokens with more intelligent models, which can be expensive in terms of latency and cost, and enables the use of smaller models to implement the sketched-out code (33m29s).

- This approach can continue with the use of smarter models for planning and less intelligent models for implementation details, potentially with even more capable models given a higher-level plan (33m56s).

- Making the model fast involves speculative edits, a variant of speculative decoding, which takes advantage of the fact that processing multiple tokens at once can be faster than generating one token at a time when memory-bound in language model generation (34m26s).

- Instead of using a small model to predict draft tokens for a larger model to verify, code edits utilize a strong prior of what the existing code will look like, which is the exact same code, to feed chunks of the original code back into the model, allowing it to process lines in parallel and reach a point of disagreement where the model predicts different text. (35m10s)

- This process results in a much faster version of normal code editing, where the model rewrites all the code. It can use the same interface as diffs but streams down much faster, so the code can be reviewed while it is still streaming (36m3s).

- The human can start reading the code before it's done, eliminating the need for a big loading screen, which is part of the advantage of this process. (36m34s)

- Speculation is a fairly common idea, not only in language models, but also in CPUs, databases, and other areas, making it an interesting concept to explore. (36m40s)
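
The speculative edits idea described above can be sketched in a few lines. This is a toy under loudly stated assumptions, not Cursor's implementation: the "model" is a deterministic stand-in that renames one identifier, the edit is one-to-one at the token level (so the draft stays aligned with the output), and each verified chunk is counted as a single parallel forward pass. All names here (`make_rename_model`, `speculative_edit`) are hypothetical.

```python
from typing import Callable, List, Tuple

Token = str
Model = Callable[[List[Token]], Token]  # prefix -> next token (greedy)


def make_rename_model(original: List[Token], old: Token, new: Token) -> Model:
    """Toy stand-in for an LLM that rewrites `original`, renaming one identifier."""
    target = [new if t == old else t for t in original] + ["<eos>"]

    def model(prefix: List[Token]) -> Token:
        return target[len(prefix)]

    return model


def speculative_edit(model: Model, original: List[Token], chunk: int = 4) -> Tuple[List[Token], int]:
    """Rewrite `original` using its own tokens as the draft; return (output, passes)."""
    out: List[Token] = []
    passes = 0
    while True:
        # Draft = the next chunk of the ORIGINAL code (the strong prior).
        draft = original[len(out):len(out) + chunk]
        passes += 1  # one simulated parallel forward pass verifies the whole chunk
        for tok in draft:
            predicted = model(out)
            out.append(predicted)
            if predicted != tok:
                break  # disagreement: keep the model's token, discard the rest
        else:
            # Every draft token was accepted; the same pass yields one extra token.
            predicted = model(out)
            if predicted == "<eos>":
                return out, passes
            out.append(predicted)


tokens = "def foo ( x ) : return foo ( x - 1 )".split()
model = make_rename_model(tokens, "foo", "bar")
edited, passes = speculative_edit(model, tokens)
print(" ".join(edited))
print(f"{passes} passes instead of {len(tokens) + 1} one-token steps")
```

A real edit can insert or delete tokens, so an actual system would need to re-align the draft with the original file after each disagreement; the sketch sidesteps that by construction.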

GPT vs Claude (36m54s)

- There is no single model that dominates others in all categories of programming, including speed, ability to edit code, process lots of code, and long context, among others (36m55s).

- Sonnet is considered the best model overall for programming tasks. o1 is good at reasoning and can perform well on hard programming interview-style problems, but it does not seem to understand the user's rough intent as well as Sonnet does (37m34s).

- Many other frontier models perform well in benchmarks but may not generalize as well to real-world programming tasks, which can be messy and less well-specified (38m0s).

- Benchmarks may not accurately represent real-world programming experiences, as they are often well-specified and abstract, whereas real-world programming involves understanding human context and intent (39m34s).

- The difference between benchmarks and real-world programming is a critical detail when evaluating models, as it can be difficult to encapsulate the complexity of real-world programming in a benchmark (38m47s).

- Public benchmarks can be problematic, as they may be overused and contaminated with training data, making it difficult to get accurate results from models (40m21s).

- Real-world programming involves a lot of context-dependent tasks, such as understanding human language and intent, which can be challenging for models to replicate (39m25s).

- The benchmark problem in AI programming does not provide the context of a codebase, allowing models to "hallucinate" the right file, function names, and other details, which can be misleading in evaluating their performance (40m41s).

- The public aspect of these benchmarks is tricky: labs may decontaminate by excluding the literal issues or pull requests from training data, but they are unlikely to omit the repository itself, which can inflate evaluation scores (40m51s).

- Popular Python repositories, such as SymPy, are unlikely to be removed from training data just to get true evaluation scores on benchmarks (41m10s).

- To evaluate the performance of AI models, some companies use humans to play with the models and provide qualitative feedback, while others use private evaluations and internal assessments (41m36s).

- The "Vibe Benchmark" or "human Benchmark" involves pulling in humans to do a "vibe check" and assess the model's performance qualitatively (41m54s).

- Online forums, Reddit, and X can be used to gather people's opinions on AI models, but it's challenging to weigh these opinions properly and determine whether the model's performance has actually degraded or if it's just a subjective feeling (42m6s).

- The performance of AI models can be affected by various factors, including the type of chips used, numerics, and quantization, which can lead to differences in performance between different models and environments (42m34s).

- Bugs can be a significant issue in AI models, and it's hard to avoid them, especially when dealing with complex systems and large amounts of data (43m21s).

Prompt engineering (43m28s)

- To maximize success when working with AI models, the importance of human input lies in designing effective prompts, which can vary depending on the model being used, as different models respond differently to different prompts (43m30s).

- Early models, such as GPT-4, were sensitive to prompts and had small context windows, requiring careful selection of relevant information to include in the prompt (44m1s).

- The challenge of deciding what to include in a prompt is complicated by limited space; even with today's models, filling the whole context window makes the model slower and can confuse it (44m27s).

- A system called "Priompt" was developed to help with this issue, particularly when working with 8,000 token context windows (44m41s).

- The approach to designing prompts can be compared to designing websites, where dynamic information needs to be formatted to work with varying inputs (45m1s).

- The declarative approach used in React and JSX has been found to be helpful in designing prompts, allowing for the declaration of priorities and the use of a rendering engine to fit the information into the prompt (45m36s).

- This approach has been adapted for use in prompt design, using a renderer to fit the information into the prompt, and has been found to be helpful in debugging and improving prompts (46m1s).

- The system uses JSX-like components, such as a file component, to prioritize and format the information in the prompt (46m45s).

- The components allow for the assignment of priorities to different lines of code, with the line containing the cursor typically being the most important (46m52s).

- The goal is to create a system where humans can ask questions in a natural way, and the system figures out how to retrieve relevant information to make sense of the query (47m47s).

- The idea is to let humans be as lazy as they want, but also to provide more information and be prompted to be articulate in terms of the depth of thoughts conveyed (48m21s).

- There is a tension between being lazy and providing more information, and the system should inspire humans to be more articulate (48m27s).

- Even with a perfect system, there may not be enough intent conveyed to know what to do, and there are ways to resolve this, such as having the model ask for clarification or showing multiple possible generations (48m42s).

- The model can suggest files to add while typing, and guess the uncertainty based on previous commits, to help resolve ambiguity (49m42s).

- The system can also suggest editing multiple files at once, such as when making an API, to edit the client and server that use the API (50m31s).

- There are two phases to consider: writing the prompt and resolving uncertainty before clicking enter, and the system can help resolve some of the uncertainty during these phases (50m48s).

- Aravind of Perplexity's idea is to let humans be as lazy as they want, but the system should also encourage humans to provide more information (48m0s).
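
The priority-based prompt rendering described above can be sketched as follows. This is a hypothetical Python illustration of the idea only; it is not Priompt's API (which is JSX-based), the token counter is a crude whitespace split, and the names `Piece`, `render`, and `file_component` are made up for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Piece:
    text: str
    priority: int  # higher means more important


def count_tokens(text: str) -> int:
    """Crude whitespace token count; a real system would use the model's tokenizer."""
    return len(text.split())


def render(pieces: List[Piece], budget: int) -> str:
    """Greedily keep the highest-priority pieces that fit, then emit them in source order."""
    keep = set()
    used = 0
    for i in sorted(range(len(pieces)), key=lambda i: -pieces[i].priority):
        cost = count_tokens(pieces[i].text)
        if used + cost <= budget:
            keep.add(i)
            used += cost
    return "\n".join(p.text for i, p in enumerate(pieces) if i in keep)


def file_component(lines: List[str], cursor_line: int) -> List[Piece]:
    """Give each line a priority that falls off with distance from the cursor."""
    return [Piece(line, priority=-abs(i - cursor_line)) for i, line in enumerate(lines)]


source = [
    "import math",
    "def area(r):",
    "    return math.pi * r * r",
    "print(area(2))",
]
pieces = file_component(source, cursor_line=2)
print(render(pieces, budget=9))
```

With a tight budget only the lines closest to the cursor survive; raising the budget pulls in lines further away, which mirrors how a declarative layout adapts to the available space.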

AI agents (50m54s)

- Agents are considered cool and resemble humans, making them seem closer to achieving Artificial General Intelligence (AGI), but they are not yet super useful for many things, although they have the potential to be useful in the future (50m59s).

- Agents could be useful for tasks that are well-specified, such as fixing bugs, and could potentially automate the process of finding the right files, reproducing the bug, fixing it, and verifying the correction (51m53s).

- Agents may not take over all of programming, as a lot of programming value lies in iterating and refining initial versions, and agents may not be suitable for tasks that require instant feedback and quick iteration (52m21s).

- A system that provides instant initial versions and allows for quick iteration may be more desirable for certain types of programming (52m44s).

- Agents could potentially be used to set up development environments, install software packages, configure databases, and deploy apps, making the programmer's life easier and more fun (52m53s).

- Cursor is not actively working on agent-based development environment setup, but it is within their scope and aligns with their goal of making programming easier and more enjoyable (53m14s).

- Agents could be used in the background to assist with tasks such as working on backend code while the programmer focuses on frontend code, and providing initial pieces of code to iterate on (53m37s).

- Speed is an essential aspect of Cursor, and most aspects of the platform feel fast, although the "apply" feature is currently the slowest part and is being worked on (54m11s).

- The goal is to make models, such as chat and diff models, fast, with a response time of around 1-2 seconds, which feels slow compared to other fast processes (54m30s).

- One strategy to achieve this is cache warming: as the user starts typing, the system anticipates the context it will likely need, such as the current file contents, and warms the cache with it, significantly lowering the time to first response (54m52s).

- This approach reuses the KV cache results, reducing latency and cost, and allows the model to prefill and compute only a few tokens before starting generation (55m6s).

- KV cache works by storing the keys and values of previous tokens in the prompt, allowing the model to reuse them instead of doing a forward pass through the entire model for every single token (55m31s).

- This approach reduces the number of matrix multiplies, making the model faster, and can be further improved by storing the keys and values for the last N tokens and reusing them to compute the output token for the N+1 token (56m22s).

- Higher-level caching, such as caching prompts or speculative caching, can also be used to improve performance, for example, by predicting ahead and triggering another request as if the user would have accepted the suggestion (57m1s).

- This approach combines speculation and caching, allowing the model to feel fast despite no actual changes in the model, and can be further improved by making the KV cache smaller, enabling more speculation and prediction (57m43s).

- By predicting multiple possible next steps, the model can increase the chances of the user hitting one of the predicted options, making the interaction feel faster and more responsive (57m55s).

- The general phenomenon in AI models is that a single sample from the model may not be very good, but predicting multiple things can increase the probability of one of them being correct, which is useful for reinforcement learning (RL) (58m15s).

- RL can exploit this phenomenon by making multiple predictions and rewarding the ones that humans prefer, allowing the model to learn and output suggestions that humans like more (59m3s).

- The model has internal uncertainty over which of the k candidates is correct or what humans want, and RL can help predict which of the model's suggestions are more amenable to humans (59m0s).

- Techniques like multi-query attention and grouped-query attention can reduce the size of the key-value (KV) cache, making it faster to generate tokens and improving speed (1h0m17s).

- Multi-query attention is the most aggressive of these techniques: it keeps all the query heads but shares a single key-value head among them, while grouped-query attention keeps all the query heads and reduces the number of key-value heads to a smaller set of groups (1h1m16s).

- Reducing the size of the KV cache can improve memory bandwidth and make token generation faster, and techniques like compressing the size of keys and values can also help (1h1m8s).

- Other techniques, such as MLA (multi-head latent attention), can also be used to improve speed and efficiency in AI models (1h2m1s).

- The MLA algorithm from DeepSeek is an interesting alternative. Reducing the number of key-value (KV) heads, as multi-query and grouped-query attention do, shrinks the cache but potentially loses the advantage of having multiple different keys and values (1h2m4s).

- To address this, a method is proposed where a single big shared vector is kept for all keys and values, and smaller vectors are used for each token, allowing for a low-rank reduction that can be expanded back out when needed. (1h2m54s)

- This approach is more efficient because it reduces the size of the vector being kept, such as from 32, and allows for more compute to be used for other tasks. (1h3m31s)

- Having a separate set of keys and values and query can be richer than compressing them into one, and this interaction is important when dealing with memory-bound systems. (1h3m42s)

- The proposed method can improve the user experience by allowing for a larger cache, which can lead to more cache hits and reduce the time to the first token. (1h4m1s)

- Additionally, the method enables faster inference with larger batch sizes and larger prompts without significant slowdown, and allows for increasing the batch size or prompt size without degrading latency. (1h4m22s)
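
To make the KV cache discussion above concrete, here is a back-of-the-envelope size calculation. The formula (keys plus values, per layer, per KV head, per token) is the standard one; the model dimensions are hypothetical and do not describe any model used by Cursor.

```python
def kv_cache_bytes(
    n_layers: int,
    n_kv_heads: int,
    head_dim: int,
    seq_len: int,
    batch: int = 1,
    bytes_per_elem: int = 2,  # assumes 16-bit storage
) -> int:
    """Size of the KV cache: the leading 2 covers one tensor for keys and one for values."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch * bytes_per_elem


# Hypothetical configuration: 32 layers, 32 query heads, head_dim 128, 8192-token prompt.
LAYERS, QUERY_HEADS, HEAD_DIM, SEQ = 32, 32, 128, 8192

variants = {
    "multi-head (32 KV heads)": QUERY_HEADS,
    "grouped-query (8 KV heads)": 8,
    "multi-query (1 KV head)": 1,
}
for name, kv_heads in variants.items():
    size = kv_cache_bytes(LAYERS, kv_heads, HEAD_DIM, SEQ)
    print(f"{name}: {size / 2**20:.0f} MiB per sequence")
```

Because the cache shrinks linearly with the number of KV heads, the same memory can hold more cached prompts or larger batches, which is the effect on time to first token and throughput described above.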

Running code in background (1h4m51s)

- The goal is to have a lot of background processes happening, such as the Shadow workspace iterating on code, to help the user at a slightly longer time horizon than just predicting the next few lines of code (1h4m53s).

- The idea is to spend computation in the background to help the user, for example, by figuring out what they will make in the next 10 minutes and providing feedback signals to the model (1h5m23s).

- The Shadow workspace is a separate window of Cursor that is hidden, allowing AI agents to modify code and get feedback from language servers without affecting the user (1h7m36s).

- The language server protocol is used in Cursor to show information to the programmer, such as type checking, going to definition, and seeing the right types, and this information is also used by AI models in the background (1h7m15s).

- The language server is an extension developed by language developers, such as the TypeScript language server, and it interfaces with VS Code through the language server protocol (1h6m31s).

- The Shadow workspace allows AI agents to run code in the background, get feedback from language servers, and iterate on their code without saving it, as long as they don't save it to the same folder (1h7m51s).

- The goal is to make the Shadow workspace mirror the user's environment exactly, and on Linux, this can be achieved by mirroring the file system and having the AI make changes to the files in memory (1h8m21s).

- On Mac and Windows, it's more difficult to achieve this, but one possible solution is a lock on saving: the language model holds the lock on saving to disk and operates on the Shadow workspace instead of the ground truth version of the files (1h8m57s).

- This approach allows the user to code in the Shadow workspace, get lint errors, and run code, but with a warning that there's a lock, and the user can take back the lock from the language server or Shadow workspace if needed (1h9m17s).

Debugging (1h9m31s)

- Allowing a model to change files can be a bit scary, but it's also cool to let the agent do a set of tasks and come back the next day to observe its work, similar to having a colleague (1h9m31s).

- There may be different versions of runability, where simple tasks can be done locally on the user's machine, but more aggressive tasks that make larger changes over longer periods of time may require a remote sandbox environment (1h9m52s).

- Reproducing the user's environment in a remote sandbox is a tricky problem, as it needs to be effectively equivalent for running code (1h10m14s).

- The desired agents for coding can perform tasks such as finding bugs, implementing new features, and automating tasks that don't have to do directly with editing (1h10m26s).

- Agents can also be used for tasks like video editing, translation, and overdubbing, and can interact with software like Adobe Premiere using code (1h10m42s).

- In terms of coding, agents can be used for bug finding, covering both logical bugs and mistakes in the overall direction of an implementation (1h11m16s).

- Current models are not good at bug finding when naively prompted, and they are poorly calibrated, which may be due to the pre-training distribution and the loss not being low enough for full generalization in code (1h11m40s).

- Frontier models are good at code generation and question answering, but struggle with tasks like bug detection, which don't have many examples online (1h12m16s).

- Transferring the model to tasks like bug detection is a challenge, but it's possible to achieve fantastic transfer from pre-trained models on code in general (1h13m6s).

- General-purpose models that are very good at coding should also be good at bug detection, but they require a little nudging in that direction to understand what is being asked of them (1h13m15s).

- These models understand code well during pre-training, building a representation that likely includes knowledge of potential issues or "sketchy" code (1h13m26s).

- However, it is hard to elicit this knowledge from the models and apply it to identify important bugs, as they lack human calibration on which bugs are critical (1h13m51s).

- Human calibration is essential in determining the severity of bugs, as it's not just about identifying "sketchy" code, but also understanding the potential impact, such as taking down a server (1h13m58s).

- Staff engineers are valuable because they possess cultural knowledge of past experiences, such as knowing that a particular piece of code caused issues in the past (1h14m7s).

- The models need to understand the context of the code being written, such as whether it's for an experiment or production, to provide relevant feedback on bugs (1h14m22s).

- In production environments, such as writing code for databases or operating systems, it's unacceptable to have even edge-case bugs, requiring a high level of paranoia and calibration (1h14m38s).

- Even with maximum paranoia settings, the models still struggle to fully understand the context and provide accurate feedback on bugs (1h14m51s).

Dangerous code (1h14m58s)

- Writing code that can cause significant damage should be accompanied by clear comments warning of potential risks, with suggestions to include warnings in all caps for emphasis, which can also help AI models identify critical areas of the code (1h14m58s).

- This practice is beneficial for both humans and AI models, as it highlights potential dangers and encourages more careful consideration, even if some people find it aesthetically unappealing (1h16m10s).

- Humans tend to forget important details and make small mistakes that can have significant consequences, making it essential to clearly label code and provide warnings (1h16m25s).

- Traditional docstrings may not be enough, as people often skim them when making changes, and explicit warnings can help prevent mistakes (1h16m42s).

- The future of programming may involve AI models suggesting specifications for functions, which humans can review, and smart reasoning models computing proofs to verify that the implementation follows the spec (1h17m25s).

- This approach could potentially reduce the need for writing tests, but it also raises questions about the difficulty of specifying intent and the challenges of formal verification (1h17m43s).

- Even with a formal spec, there may be aspects of the code that are difficult to specify or prove, and the spec itself may need to be written in a formal language that is not easily understood by humans (1h18m15s).

- Formal verification of entire code bases is a desired goal, but it's harder to achieve compared to single functions, and it requires decomposing the system into smaller parts and verifying each one (1h18m58s).

- Recent work has shown that it's possible to formally verify code down to the hardware level, which could be applied to large code bases (1h19m7s).

- The specification problem is a real challenge in formal verification, particularly when dealing with side effects, external dependencies, and language models (1h19m36s).

- To address these challenges, it might be necessary to develop new specification languages that can capture the complexities of modern code (1h18m45s).

- Language models could potentially be used as primitives in programs, but this raises questions about how to verify their behavior and ensure they are aligned with the desired outcome (1h19m57s).

- Proving that a language model is aligned or gives the correct answer is a desirable goal, which could help with AI safety and bug finding (1h20m9s).

- The initial hope is that AI models can help with bug finding by catching simple bugs, such as off-by-one errors, and eventually more complex bugs (1h20m51s).

- Good bug-finding models are necessary to enable AI to do more programming, as they need to be able to verify and check the code generated by AI (1h21m20s).

- Training a bug model is a challenging task, and there have been contentious discussions about the best approach (1h21m54s).

- One popular idea is to train a model to introduce bugs in existing code, then train a reverse bug model to find bugs using synthetic data, as it's often easier to introduce bugs than to find them (1h21m57s).

- Another approach is to give models access to a lot of information beyond just the code, such as running the code and seeing traces and step-through debuggers, to help find bugs (1h22m25s).

- There could be two different product form factors: a specialty model that runs in the background to spot bugs, and a more powerful model that can be used to solve specific problems with a lot of compute power (1h22m47s).

- Integrating money into the system could be a way to incentivize users to find and report bugs, with features like bug bounties or paying for code that is particularly useful (1h23m10s).

- The idea of integrating money is controversial, with concerns about people copying and pasting code to get paid, and the potential for the product to feel less fun when money is involved (1h24m30s).

- One possible implementation of this idea is to have users pay a small amount of money to accept a bug that has been found, with the amount displayed in parentheses, such as $1 (1h24m53s).

- The use of AI-generated code has already shown promise, with examples of perfect functions being generated for interacting with APIs, and users being willing to pay for high-quality code (1h23m24s).

- The concept of a "tip" button has been suggested, where users can pay a small amount of money to support the company and show appreciation for good code (1h23m57s).

- A potential business model for AI-powered programming tools could involve separating the code from the tool and charging a monthly fee for access to the tool, with an optional tipping component for users who want to support the developers of the code (1h25m19s).

- The tipping component could be introduced when users share examples or code with others, as this is often when people want to show appreciation for the work of others (1h25m40s).

- An alternative solution to the honor system for bounty systems could be a technical solution that verifies when a bug has been fixed, eliminating the need for users to rely on the honor system (1h26m0s).

- This technical solution could involve advanced error checking and code verification, potentially using Language Server Protocol (LSP) and other technologies to ensure the accuracy of the code (1h25m56s).

- The goal of this technical solution would be to create a system where users can trust that the code has been fixed without relying on the honor system, making the bounty system more reliable and efficient (1h26m4s).
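
The idea of checking an implementation against a reviewed specification can be illustrated with a much weaker stand-in for formal verification. The sketch below writes the spec for sorting as an executable predicate and checks it exhaustively on small inputs. This is bounded checking, not a proof, and the names `satisfies_sort_spec`, `check_against_spec`, and `buggy_sort` are hypothetical.

```python
from collections import Counter
from itertools import product
from typing import Callable, Sequence


def satisfies_sort_spec(inp: Sequence[int], out: Sequence[int]) -> bool:
    """Spec: the output is ordered and is a permutation of the input."""
    ordered = all(a <= b for a, b in zip(out, out[1:]))
    same_items = Counter(inp) == Counter(out)
    return ordered and same_items


def check_against_spec(impl: Callable[[list[int]], list[int]],
                       max_len: int = 4, values: range = range(3)):
    """Return the first counterexample found, or None if every small input passes."""
    for length in range(max_len + 1):
        for candidate in product(values, repeat=length):
            inp = list(candidate)
            out = impl(list(inp))  # pass a copy so impl cannot mutate inp
            if not satisfies_sort_spec(inp, out):
                return inp
    return None


def buggy_sort(xs: list[int]) -> list[int]:
    # Deliberate bug: deduplicates, so the result is ordered but not a permutation.
    return sorted(set(xs))
```

Calling `check_against_spec(sorted)` finds no counterexample, while `check_against_spec(buggy_sort)` returns an input with a repeated element. A proof-based approach would establish the property for all inputs rather than a bounded set.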

Branching file systems (1h26m9s)

- There's a gap between the terminal and the code, and it would be beneficial to have a loop that runs the code, suggests changes, and provides error messages if the code and runtime give an error (1h26m10s).

- Currently, there are separate worlds for writing code and running it, but having a looping system could make sense, with the question of whether it happens in the foreground or background (1h26m28s).

- A database side to this concept is being developed, with the ability to add branches to a database, allowing for testing against a production database without modifying it (1h26m56s).

- This feature is being developed by companies like PlanetScale, and it involves adding a branch to the write-ahead log, which comes with technical complexity (1h27m14s).

- Database companies are working on adding new features, such as branching, to their products, and AI agents may use this feature to test against different branches (1h27m47s).

- The idea of branching is not limited to databases, and it would be interesting to have branching file systems, which could be a requirement for databases to support (1h28m9s).

- The concept of branching everything raises concerns about space and CPU usage, but there are algorithms to mitigate these issues (1h28m21s).

- The company mostly uses AWS, which is chosen for its reliability and trustworthiness, despite having a complex setup process and interface (1h28m33s).

- AWS is considered to be "really really good" and can always be trusted to work, with any problems likely being the user's fault (1h29m10s).
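
The branching idea discussed above can be sketched as a copy-on-write view over a key-value store: a branch records only the keys it changes and reads everything else from its parent, which is one way to keep space usage low. This is a toy model under stated assumptions; the `Branch` class is hypothetical, and real databases branch at the level of the write-ahead log or storage pages.

```python
from typing import Optional

_DELETED = object()  # tombstone marking a key removed on a branch


class Branch:
    """Hypothetical sketch: a copy-on-write view over a parent store."""

    def __init__(self, parent: Optional["Branch"] = None) -> None:
        self.parent = parent
        self.local: dict[str, object] = {}  # only the keys changed on this branch

    def get(self, key: str):
        # Walk up the chain of branches until some level knows the key.
        node = self
        while node is not None:
            if key in node.local:
                value = node.local[key]
                if value is _DELETED:
                    raise KeyError(key)
                return value
            node = node.parent
        raise KeyError(key)

    def put(self, key: str, value: object) -> None:
        self.local[key] = value

    def delete(self, key: str) -> None:
        # Record a tombstone so the parent's value is hidden, not destroyed.
        self.local[key] = _DELETED

    def fork(self) -> "Branch":
        # Forking is O(1): nothing is copied until the child writes.
        return Branch(parent=self)
```

An agent could `fork()` the production view, run destructive tests on the fork, and discard it without the parent ever changing.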

Scaling challenges (1h29m20s)

- The process of scaling a startup to accommodate a large number of users can be challenging, with each additional zero added to the requests per second introducing new issues with general components such as caching and databases (1h29m21s).

- At a certain scale, systems can experience issues such as integer overflows on tables, and custom systems like the retrieval system for computing a semantic index of a codebase can be particularly tricky to scale (1h29m45s).

- Predicting where systems will break when scaling them can be difficult, even for senior engineers, and there is often something unexpected that happens when adding an extra layer of complexity (1h30m7s).

- The retrieval system involves uploading code, chunking it up, and sending it for embedding, then storing the embeddings in a database without storing the actual code (1h30m31s).

- To ensure the local index and server state are the same, a hash is kept for every file and folder, with the folder hash being the hash of its children, and this is done recursively until the top (1h31m22s).

- This approach allows for efficient reconciliation of the local and server state, avoiding the need to download hashes from the server every minute and reducing network overhead (1h31m31s).

- The alternative approach of downloading hashes from the server every minute would introduce significant network overhead and database overhead, making it impractical (1h31m56s).

- The current approach only reconciles the single hash at the root of the project, and if there is a mismatch, it recursively checks the children to find the disagreement, reducing the overhead (1h32m30s).

- The Merkle tree is a data structure used for reconciliation, allowing for efficient scaling of code bases across multiple programmers, which is a difficult problem to solve, especially for large companies with enormous numbers of files (1h32m55s).

- The main challenge in scaling the current solution is coming up with new ideas and implementing them, as well as dealing with the complexities of indexing systems, such as embedding code and managing branches (1h33m40s).

- The bottleneck in terms of costs is not storing data in the vector database, but rather embedding the code, which can be optimized by using a clever trick involving caching vectors computed from the hash of a given chunk (1h33m58s).

- This optimization allows for fast access to code bases without storing any code data on servers, only storing vectors in the vector database and vector cache (1h34m39s).

- The biggest gain from indexing the code base is the ability to quickly find specific parts of the code, even with a fuzzy memory of what to search for, by asking questions to a chat that can retrieve relevant information (1h35m4s).

- The usefulness of this feature is expected to increase over time as the quality of retrieval is improved, with a high ceiling for potential growth (1h35m40s).

- Local implementation of the technology has not been prioritized, as the current focus is on cloud-based solutions that can handle large code bases and multiple programmers, despite the added complexity of caching and puzzle-solving required (1h35m51s).

- Most software performs heavy computational tasks locally, but doing embeddings locally is challenging and may only work on the latest computers, with over 80% of users having less powerful Windows machines (1h36m10s).

- Local models are not feasible for big companies with large codebases, even with the most powerful MacBook Pros, as processing big codebases is difficult and would result in a poor user experience (1h37m11s).

- Massive codebases would consume a lot of memory and CPU, making local models impractical, and even approximate nearest neighbor search over such a codebase would be expensive (1h37m41s).

- The trend towards MoE (Mixture of Experts) models may favor local models in terms of memory bandwidth, but these models are larger and require more resources, making them difficult to run locally (1h37m55s).

- For coding, users often want the most intelligent and capable models, which are hard to run locally, and people may not be satisfied with inferior models (1h38m31s).

- Some people prefer to do things locally, and there is an open-source movement that resists the growing power centers, but an alternative to local models is homomorphic encryption for language model inference, which is still in the research stage (1h39m8s).

- Homomorphic encryption allows users to encrypt their input locally, send it to a server for processing with powerful models, and receive the answer without the server being able to see the data (1h39m27s).

- Homomorphic encryption is being researched to make the overhead lower, as it has the potential to be really impactful in the future, allowing for privacy-preserving machine learning (1h39m49s).

- As AI models become more economically useful, more of the world's information and data will flow through one or two centralized actors, raising concerns about traditional hacker attempts and surveillance (1h40m15s).

- The centralization of information flow creates a slippery slope where surveillance code can be added, leading to potential misuse of data (1h40m53s).

- Solving homomorphic encryption for privacy-preserving machine learning is a challenge, but it's essential to address the downsides of relying on cloud services (1h41m8s).

- The current reliance on cloud services has downsides, including the control of data by a small set of companies, which can be infiltrated in various ways (1h41m34s).

- The world is moving towards a place where powerful models will require monitoring of all prompts for security reasons, but this raises concerns about centralization and trust (1h42m5s).

- The centralization of information flow through a few model providers is a concern, as it's a fine line between preventing models from going rogue and trusting humans with the world's information (1h42m27s).

- The difference between cloud providers and model providers is that the latter often requires personal data that would never have been put online, centralizing control and making it difficult to use personal encryption keys (1h42m48s).
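
The hash reconciliation described earlier in this section (a hash per file, a folder hash derived from its children, and a recursive descent only where hashes disagree) can be sketched as follows. This is a minimal illustration, not Cursor's implementation: the nested-dict tree layout and the `build` and `diff` helpers are assumptions made for the example.

```python
import hashlib


def sha(data: str) -> str:
    return hashlib.sha256(data.encode("utf-8")).hexdigest()


def build(node) -> dict:
    """Hash a file (str content) or a folder (dict of name -> node), bottom up."""
    if isinstance(node, str):
        return {"hash": sha("file:" + node), "children": None}
    children = {name: build(child) for name, child in sorted(node.items())}
    # A folder's hash is the hash of its children's names and hashes.
    joined = "".join(f"{name}:{child['hash']};" for name, child in children.items())
    return {"hash": sha("dir:" + joined), "children": children}


def diff(local: dict, remote: dict, path: str = "") -> list[str]:
    """Return paths that differ, descending only where hashes disagree."""
    if local["hash"] == remote["hash"]:
        return []  # identical subtree: nothing below needs checking
    lc, rc = local["children"], remote["children"]
    if lc is None or rc is None:
        return [path or "/"]  # a file changed, or a file/folder type mismatch
    changed = []
    for name in sorted(set(lc) | set(rc)):
        child_path = f"{path}/{name}"
        if name not in lc or name not in rc:
            changed.append(child_path)  # added or removed on one side
        else:
            changed.extend(diff(lc[name], rc[name], child_path))
    return changed
```

When the two roots match, a single hash comparison is the whole sync check. When they differ, only the mismatching branches are walked, so the work is proportional to what changed rather than to the size of the codebase.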

Context (1h43m32s)

- Writing code in Python can be challenging due to the need to import various libraries and define variables, making it difficult for AI models to automatically determine the context (1h43m34s).

- Computing context automatically is a complex task, and there are trade-offs to consider, such as the model's speed and expense, as well as the potential for confusion with too much information in the prompt (1h43m50s).

- Including automatic context in AI models can make them slower and more expensive, and may also lead to decreased accuracy and relevance if the context is not carefully curated (1h44m0s).

- The bar for accuracy and relevance of included context should be high, and there are ongoing efforts to improve automatic context in AI models, including the development of better retrieval systems and learning models (1h44m23s).

- Researchers are exploring ways to enable language models to understand new corpora of information and make the context window infinite, allowing the model to pay attention to the infinite context and potentially leading to a qualitatively different type of understanding (1h44m54s).

- Ideas being tried to achieve this include caching for infinite context and fine-tuning models so that they learn the information directly in their weights (1h45m15s).

- A proof of concept for learning knowledge directly in the weights is VS Code, of which Cursor is a fork: models have been fine-tuned to answer questions about code and have shown promising results (1h45m55s).

- The question of whether to train a model to understand a specific code base or to have the model do everything end-to-end is an open research question, with uncertainty around the best approach (1h46m35s).

- Separating the retrieval from the frontier model is a potential approach, where a good open source model is trained to be the retriever, feeding context to the larger, more capable models (1h46m53s).

- Post-training a model to understand a code base involves trying various methods, such as replicating what's done with VS Code and frontier models, and using a combination of general code data and data from a particular repository (1h47m16s).

- One approach to post-training is to use instruction fine-tuning with a normal instruction fine-tuning dataset about code, and then adding questions about code in the repository, which can be obtained through ground truth or synthetic data (1h47m55s).

- Synthetic data can be generated by having the model ask questions about various pieces of the code, or by prompting the model to propose a question for a piece of code, and then adding those as instruction fine-tuning data points (1h48m10s).

- This approach might unlock the model's ability to answer questions about the code base, by adding the synthetic data points to the instruction fine-tuning dataset (1h48m32s).
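
The synthetic-data step described above (have a model propose a question for each piece of code, then add the pair to the instruction fine-tuning set) can be sketched like this. The record fields and all function names are hypothetical, and `fake_propose` and `fake_answer` are stand-ins for real model calls so that the sketch runs without any API.

```python
import json
from typing import Callable


def build_repo_sft_examples(
    chunks: dict[str, str],
    propose_question: Callable[[str, str], str],
    answer_question: Callable[[str, str], str],
) -> list[dict[str, str]]:
    """Turn code chunks into instruction/response pairs for fine-tuning."""
    examples = []
    for path, code in chunks.items():
        question = propose_question(path, code)
        examples.append({
            "instruction": question,
            "response": answer_question(question, code),
            "source": path,
        })
    return examples


# Stand-ins for real model calls (assumptions, not a real API).
def fake_propose(path: str, code: str) -> str:
    return f"What does the code in {path} do?"


def fake_answer(question: str, code: str) -> str:
    first_line = code.strip().splitlines()[0]
    return f"It starts with `{first_line}`."


def to_jsonl(examples: list[dict[str, str]]) -> str:
    # One JSON object per line, a common format for fine-tuning datasets.
    return "\n".join(json.dumps(e) for e in examples)
```

The resulting records would be mixed into a general instruction fine-tuning dataset about code, as the notes describe.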

OpenAI o1 (1h48m39s)

- The role of test-time compute systems in programming is seen as really interesting, as they allow increasing the number of inference-time FLOPs used while still getting corresponding improvements in model performance (1h48m46s).

- Traditionally, training a bigger model that always uses more flops was necessary, but now it's possible to use the same size model and run it for longer to get an answer at the quality of a much larger model (1h49m33s).

- There are some problems that may require a 100 trillion parameter model, but that's only a small percentage of all queries, making it wasteful to spend effort training a model that costs that much and then running it so infrequently (1h49m50s).

- Figuring out which problem requires what level of intelligence is an open research problem, and dynamically determining when to use different models is a challenge that hasn't been fully solved (1h50m27s).

- The "model routing problem" involves determining when to use different models, such as GPT-4 or a small model, and it's unclear what level of intelligence is needed to determine if a problem is too hard for a particular model (1h50m42s).

- There are three stages in the process: pre-training, post-training, and test time compute, with test time compute requiring a whole training strategy to work and being not well understood outside of big labs (1h51m20s).

- The biggest gains are unclear, but test time compute may eventually dwarf pre-training in terms of compute spent, and it's possible that the compute spent on getting test time compute to work for a model will be significant (1h52m7s).

- The exact methods used by big labs, such as OpenAI, to implement test time compute are not well understood, and there are some interesting papers that provide hints but no clear answers (1h51m40s).

- To build a competing model, one approach would be to train a process reward model, which grades the chain of thought rather than just the final outcome, allowing for more nuanced evaluation of the model's reasoning (1h52m35s).

- Outcome reward models, on the other hand, are traditional reward models that look at the final result and assign a grade based on its accuracy, which is commonly used in language modeling (1h52m45s).

- Process reward models have been explored in preliminary papers, such as one by OpenAI, which used human labelers to create a large dataset of chains of thought, but more research is needed to effectively utilize these models (1h53m9s).

- One potential application of process reward models is tree search, where the model can explore multiple paths of thought and evaluate the quality of each branch, allowing for more efficient and effective problem-solving (1h53m56s).

- However, training process reward models in a more automated way is still an open challenge, and more research is needed to develop effective methods for doing so (1h54m35s).

- OpenAI has chosen to hide the chain of thought from users, instead asking the model to summarize its reasoning, and monitoring the chain of thought to prevent manipulation, which raises questions about the potential risks and benefits of this approach (1h54m54s).

- One possible reason for hiding the chain of thought is to make it harder for others to replicate the technology, as having access to the chain of thought could provide valuable data for training competing models (1h55m22s).

- APIs were initially used to offer easy access to log probabilities for tokens, but some of these APIs were taken away, possibly to prevent users from distilling capabilities out of the models into their own control (1h55m50s).

- The integration of o1 in Cursor is still experimental, and it is not part of the default experience, as the team is still learning how to use the model effectively (1h56m22s).

- o1 has significant limitations, such as not being able to stream, which makes it painful to use for tasks that require supervising the output, and it feels like the early stages of test-time compute (1h57m28s).

- The development of AI models is an ongoing process, with two main threads: increasing pre-training data and model size, and improving search capabilities (1h58m7s).

- GitHub Copilot might be integrating o1, but this does not mean that Cursor is done, as the space is constantly evolving, and the best product in the future will be much more useful than the current one (1h58m15s).

- The key to success in this space is continuous innovation, and startups have an opportunity to win by building something better, even against established players with many users (1h59m11s).

- The focus should be on building the best product and system over the next few years, rather than worrying about competition or market share (1h59m24s).

- The additional value of Cursor comes from both the modeling engine side and the editing experience, offering more than just integrating new models quickly (1h59m30s).

- The depth that goes into custom models, which work in every facet of the product, contributes to the value of Cursor (1h59m47s).

- The product also features thoughtful user experience (UX) design with every single feature (1h59m55s).
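
The combination of a process reward model and tree search mentioned in this section can be illustrated on a toy task. In the sketch below, the "reasoning steps" are arithmetic moves toward a target number, and `process_reward` is a hand-written stand-in for a learned process reward model that scores partial chains. None of this reflects how o1 or any lab's system works; every name here is hypothetical.

```python
from typing import Callable

Step = tuple[str, Callable[[int], int]]

STEPS: list[Step] = [("+3", lambda x: x + 3), ("*2", lambda x: x * 2)]


def process_reward(value: int, target: int) -> float:
    """Toy stand-in for a process reward model: scores a partial chain."""
    if value > target:
        return float("-inf")  # overshooting can never recover in this toy task
    return -float(target - value)


def beam_search(start: int, target: int, beam_width: int = 2, max_depth: int = 6):
    """Keep the best `beam_width` partial chains at every depth."""
    beam = [(start, [])]  # (current value, steps taken so far)
    for _ in range(max_depth):
        candidates = []
        for value, chain in beam:
            for name, fn in STEPS:
                new_value = fn(value)
                if new_value == target:
                    return chain + [name]
                candidates.append((new_value, chain + [name]))
        # Rank partial chains by the process reward, not by a final outcome.
        candidates.sort(key=lambda c: process_reward(c[0], target), reverse=True)
        beam = candidates[:beam_width]
    return None
```

An outcome reward model could only grade finished chains. Scoring intermediate states is what lets the search prune unpromising branches early.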

Synthetic data (2h0m1s)

- Synthetic data can be categorized into three main types: distillation, where a language model outputs tokens or probability distributions over tokens to train a less capable model; one-directional problems, where introducing bugs or errors is easier than detecting them, and a model can be trained to detect bugs using synthetic data; and producing texts with language models that can be verified easily, such as using a verification system to detect Shakespeare-level language or formal language in math (2h0m9s).

- Distillation is useful for eliciting capabilities from expensive, high-latency models and distilling them down into smaller, task-specific models, but it won't produce a more capable model than the original one (2h0m50s).

- One-directional problems, such as bug detection, can be addressed by introducing reasonable-looking bugs into code using a model that's not highly trained or smart, and then training a model to detect those bugs using synthetic data (2h1m11s).

- Producing texts with language models that can be verified easily is a category of synthetic data that big labs are focusing on, and it involves generating texts that can be verified using a verification system, such as detecting Shakespeare-level language or formal language in math (2h1m47s).

- Verification is crucial in this category, and it's best when it's done using tests, formal systems, or manual quality control, rather than relying on language models to verify the output (2h3m14s).

- This category of synthetic data has the potential to result in massive gains, but it can be challenging to get it to work in all domains or with open-ended tasks (2h3m42s).
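
The one-directional case above, where introducing a bug is easier than finding it, can be sketched with Python's `ast` module: take clean code, plant a plausible off-by-one error, and keep the (buggy, clean) pair as a training example for a bug detector. The `OffByOneInjector` class and `make_training_pair` function are hypothetical. The notes describe using a model to introduce bugs, for which this rule-based mutation is only a simple stand-in.

```python
import ast
from typing import Optional


class OffByOneInjector(ast.NodeTransformer):
    """Rewrite a single `<` comparison into `<=` to plant an off-by-one bug."""

    def __init__(self) -> None:
        self.mutated = False

    def visit_Compare(self, node: ast.Compare) -> ast.Compare:
        self.generic_visit(node)  # visit nested expressions first
        if not self.mutated:
            for i, op in enumerate(node.ops):
                if isinstance(op, ast.Lt):
                    node.ops[i] = ast.LtE()
                    self.mutated = True
                    break
        return node


def make_training_pair(clean_source: str) -> Optional[dict[str, str]]:
    """Return a {buggy, clean} pair, or None if there was nothing to mutate."""
    injector = OffByOneInjector()
    buggy_tree = injector.visit(ast.parse(clean_source))
    if not injector.mutated:
        return None
    return {
        "buggy": ast.unparse(buggy_tree),
        # Round-trip the clean code too, so both sides share one formatting.
        "clean": ast.unparse(ast.parse(clean_source)),
    }
```

Because the label is known by construction, pairs like these need no human annotation. `ast.unparse` requires Python 3.9 or newer.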

RLHF vs RLAIF (2h3m48s)

- RLHF (Reinforcement Learning from Human Feedback) is a method where the reward model is trained from labels collected from humans giving feedback, which can be effective if a large amount of human feedback is available for a specific task (2h3m59s).

- RLAIF (Reinforcement Learning from AI Feedback) is an alternative approach that relies on the constraint that verification is easier than generation, using a language model to verify and improve its own outputs (2h4m19s).

- RLAIF may work if the language model has an easier time verifying solutions than generating them, potentially allowing for recursive improvement (2h4m43s).

- A hybrid approach can be used, combining elements of RLHF and RLAIF, where the model is mostly correct and only needs a small amount of human feedback (around 50-100 examples) to align its prior with the desired outcome (2h4m57s).

- This hybrid approach differs from traditional RLHF, which typically involves training reward models with large amounts of data (2h5m29s).

Fields Medal for AI (2h5m34s)

- The comparison between generation and verification or generation and ranking in AI is discussed, with the intuition being that ranking is way easier than generation, similar to the concept that verifying a proof is much easier than actually proving it, which relates to the P vs NP problem (2h5m34s).

- The possibility of AI solving the P vs NP problem is mentioned, which would be a significant achievement, potentially worthy of a Fields Medal, raising the question of who would receive the credit (2h6m10s).

- The Fields Medal is considered a more likely achievement for AI than the Nobel Prize, with the possibility of AI making progress in isolated systems where verification is possible (2h6m37s).

- The path to achieving a Fields Medal in mathematics is considered unclear, with many hard open problems, such as the Riemann Hypothesis or the Birch and Swinnerton-Dyer Conjecture, which are difficult to solve and lack a clear path to a solution (2h7m31s).

- The idea that AI might achieve a Fields Medal before achieving Artificial General Intelligence (AGI) is proposed, with the possibility of AI making progress in specific areas of mathematics (2h7m55s).

- A potential timeline for achieving a Fields Medal is discussed, with 2030 being mentioned as a possible target, although it feels like a long time given the rapid progress being made in AI research (2h8m8s).
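
The intuition from the start of this section, that verifying is much easier than generating, can be made concrete with subset sum, a standard NP-complete problem. In this sketch, checking a proposed answer is a single pass, while the naive search may try every subset. The function names are chosen for illustration only.

```python
from itertools import combinations
from typing import Optional, Sequence


def verify(nums: Sequence[int], target: int, indices: Sequence[int]) -> bool:
    """Checking a proposed answer takes one pass over the certificate."""
    distinct = len(set(indices)) == len(indices)
    in_range = all(0 <= i < len(nums) for i in indices)
    return distinct and in_range and sum(nums[i] for i in indices) == target


def solve(nums: Sequence[int], target: int) -> Optional[tuple[int, ...]]:
    """Finding an answer by brute force tries up to 2**len(nums) subsets."""
    for size in range(len(nums) + 1):
        for indices in combinations(range(len(nums)), size):
            if sum(nums[i] for i in indices) == target:
                return indices
    return None
```

The same asymmetry is what makes ranking or verifying model outputs attractive as a training signal in domains where a checker exists.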

Scaling laws (2h8m17s)

- The concept of scaling laws in AI development is discussed, with the original paper by OpenAI being slightly incorrect due to issues with learning rate schedules, which was later corrected by the Chinchilla paper (2h8m18s).

- The current state of scaling laws is that people are deviating from the optimal approach, instead optimizing for making the model work well within a given inference budget (2h8m53s).

- There are multiple dimensions to consider when looking at scaling laws, including compute, number of parameters, data, inference compute, and context length, with context length being particularly important for certain applications (2h9m0s).

- Some models, such as SSMs, may be more suitable for certain tasks due to their ability to handle long context windows efficiently, despite being 10x more expensive to train (2h9m21s).

- The idea of "bigger is better" is still relevant, but there are limitations and other approaches, such as distillation, can be used to improve performance and reduce inference time (2h10m11s).

- Distillation involves training a smaller model on the output of a larger model, allowing for more efficient use of data and potentially overcoming the limitations of available data (2h11m8s).

- Knowledge distillation, as used in the Gemma model, involves training a smaller model to minimize the Kullback-Leibler divergence with the distribution of a larger model, resulting in a faster and smaller model (2h10m54s).

- Distillation can help to extract more signal from the data and partially alleviate the problem of limited data availability (2h11m27s).

- If given $1 trillion to spend on improving large models or paying for high-performance computing (HPC) resources, the first step would be to acquire all the information and parameters that define how the model is trained, as there are many secrets and details about training large models that are only privy to large labs (2h11m51s).

- To maximize "raw intelligence" in the next 5 years, one possible approach is to invest in getting as much compute as possible, such as buying GPUs, and then letting researchers find the optimal balance between big and small models (2h12m51s).

- However, it's unclear whether the main limitation is compute and money or other factors, such as ideas and engineering expertise (2h13m15s).

- Having a lot of compute can enable running many experiments, but it's also important to have good engineering and ideas to make progress (2h13m28s).

- Even with unlimited compute and data, progress is ultimately limited by the availability of skilled engineers who can make a difference (2h14m0s).

- Research involves a lot of hard engineering work, such as writing efficient code and optimizing performance, as illustrated by the example of the original Transformer paper (2h14m16s).

- Making it easier for researchers to implement and test their ideas, for example by reducing the cost and complexity of model development, could significantly speed up research (2h15m4s).

- If a clear path to improvement is identified, it's worth investing in, as it can lead to significant breakthroughs (2h15m27s).

- Large AI labs tend to focus on the "low-hanging fruit" first, meaning ideas that can be scaled up to achieve significant improvements in performance, such as whatever gets them to GPT-4.25, and continue to build upon them as long as they remain effective (2h15m31s).

- This approach is taken because it is easier to continue scaling up existing ideas rather than experimenting with new ones, especially when the current methods are still yielding results (2h15m52s).

- However, when significant resources, such as $10 trillion, are being spent, it may be necessary to reevaluate existing ideas and consider new ones to achieve further advancements in AI (2h16m7s).

- It is believed that new ideas are necessary to achieve true AI, and there are ways to test these ideas at smaller scales to gauge their potential effectiveness (2h16m14s).

- Despite this, large labs often struggle to dedicate their limited research and engineering talent to exploring new ideas when their current approaches are still showing promise (2h16m37s).

- The success of large labs can also make it difficult for them to divert resources away from their current strategies, even if it means potentially missing out on new and innovative ideas (2h16m55s).
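The knowledge-distillation objective mentioned earlier in this section (training a smaller model to minimize the Kullback-Leibler divergence with a larger model's distribution) can be sketched in a few lines. This is a minimal illustration in plain Python, not the training code of Gemma or any other lab: the function names, the `temperature` parameter, and the example logits are all assumptions made for the sketch.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw logits into a probability distribution over the vocabulary."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions over the same vocabulary."""
    # eps guards against division by zero if a probability underflows.
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))

def distillation_loss(teacher_logits, student_logits, temperature=1.0):
    """Loss the student minimizes: KL(teacher || student) at one token position."""
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    return kl_divergence(teacher, student)

# Hypothetical logits over a 4-token vocabulary (illustrative values only).
teacher_logits = [2.0, 1.0, 0.1, -1.0]
close_student = [1.9, 1.1, 0.0, -0.9]   # nearly matches the teacher
far_student = [-1.0, 0.1, 1.0, 2.0]     # ranks tokens in the opposite order

print("close student loss:", distillation_loss(teacher_logits, close_student))
print("far student loss:  ", distillation_loss(teacher_logits, far_student))
```

The student that tracks the teacher's distribution closely gets a loss near zero, while the mismatched student gets a larger one. In real training this quantity would be averaged over many token positions and minimized with gradient descent, typically using a deep-learning framework rather than plain Python.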

The future of programming (2h17m6s)

- The future of programming is envisioned as a space where the programmer is in the driver's seat, emphasizing speed, agency, control, and the ability to modify and iterate quickly on what they're building (2h17m7s).

- This approach differs from the idea of talking to a computer and having it build software, which would require giving up control and specificity, making it harder to make important decisions (2h17m52s).

- The best engineering involves making tiny micro-decisions and trade-offs between speed, cost, and other factors, and humans should be in the driver's seat making these decisions (2h18m53s).

- As long as humans are designing software and specifying what they want to be built, they should be in control, dictating decisions and not just relying on AI (2h19m11s).

- One possible idea for the future of programming is being able to control the level of abstraction at which a codebase is viewed, for example by editing a pseudocode representation and having the corresponding changes made at the formal programming level (2h19m31s).

- This approach would allow for productivity gains while maintaining control, and the ability to move up and down the abstraction stack (2h20m13s).

- The principles of control, speed, and human agency are considered essential, and while there are details to figure out, this approach is seen as a promising direction for the future of programming (2h20m23s).

- The fundamental skill of programming is expected to change, but this change is seen as an exciting opportunity rather than a threat, as it will allow programmers to focus on more creative and high-level tasks (2h20m59s).

- Programming has become more enjoyable over time, with less emphasis on boilerplate code and more focus on building things quickly and having individual control (2h21m27s).

- The future of programming is expected to be more fun, with skills changing to focus more on creativity, design, and high-level decision-making, and less on carefulness and boilerplate text editing (2h21m54s).

- AI tools are expected to play a larger role in programming, allowing developers to work more efficiently and focus on more complex tasks, such as design and decision-making (2h22m41s).

- One of the benefits of AI-assisted programming is the ability to iterate quickly and make changes without having to think everything through upfront, allowing for a more experimental and iterative approach (2h23m12s).

- The use of AI tools is expected to reduce the time and effort required for tasks such as code migration, allowing developers to focus on more complex and creative tasks (2h22m53s).

- The ability to generate boilerplate code using AI tools is seen as a major advantage, allowing developers to focus on more difficult and nuanced design decisions (2h23m56s).

- Large language models are expected to play a key role in the future of programming, enabling developers to work more efficiently and effectively (2h24m8s).

- The fear surrounding AI models in programming is that as they improve, humans will make fewer creative decisions, potentially leading to a future where natural language is the primary programming language (2h24m19s).

- Those interested in programming are advised to learn JavaScript, as it is expected to become the dominant language, possibly alongside a little PHP (2h24m56s).

- The idea that only a specific type of person, often referred to as "geeks," can excel in programming is being challenged, as AI may expand the range of people who can program effectively (2h25m14s).

- The best programmers are often those who have a deep passion for programming, spending their free time coding and constantly seeking to improve their skills (2h25m39s).

- As AI features such as "super tab" or Cursor Tab become more advanced, they will allow programmers to inject intent and shape their creations more efficiently, effectively increasing the bandwidth of human-computer communication (2h26m55s).

- The goal of building a hybrid human-AI programmer, as outlined in the "Engineering Genius" manifesto, is to create an engineer who is an order of magnitude more effective than any single engineer, with effortless control over their code base and the ability to iterate at the speed of their judgment (2h27m41s).

- Researchers and engineers build software and models to invent at the edge of what's useful and what's possible, with their work already improving the lives of hundreds of thousands of programmers (2h28m10s).

- Their goal is to make programming more fun (2h28m21s).

- The conversation features Michael, Sualeh, Arvid, and Aman (2h28m30s).

- The podcast is supported by sponsors listed in the description (2h28m34s).

- A random programming quote from Reddit is shared: "Nothing is as permanent as a temporary solution that works" (2h28m45s).