Stanford Seminar - Improving Computational Efficiency for Powered Descent Guidance

22 Jun 2024

Introduction

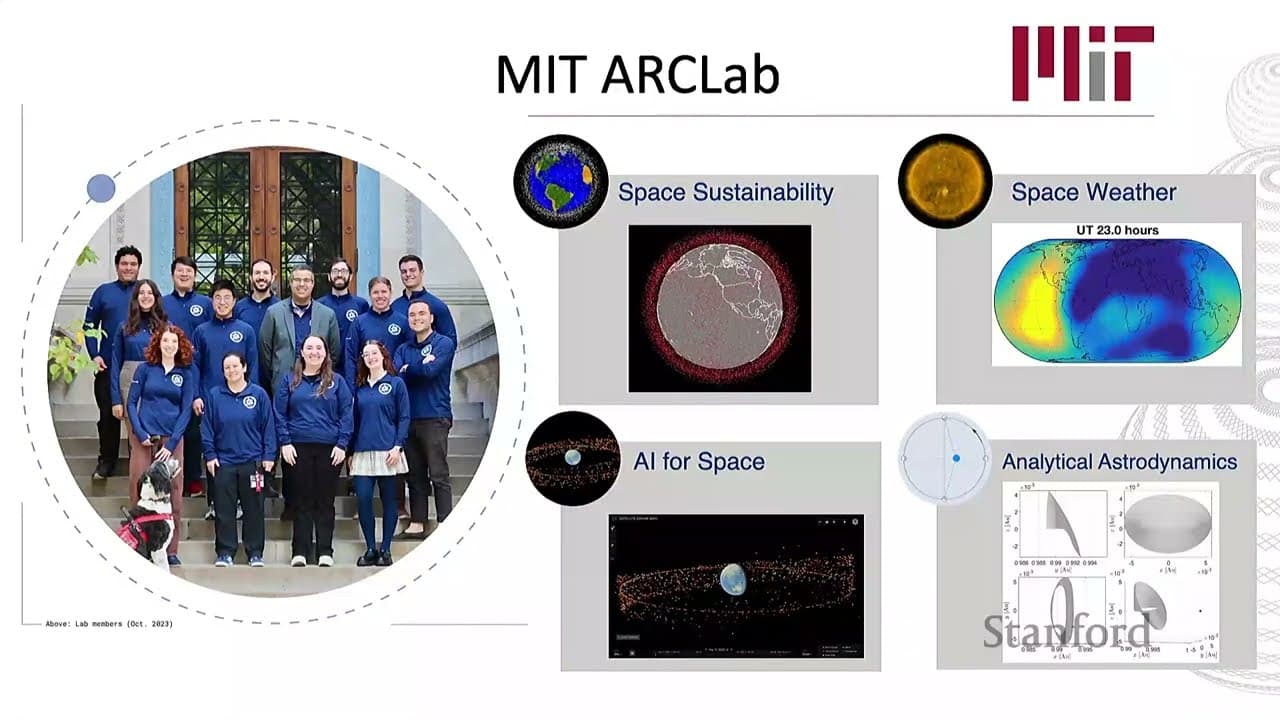

- The speaker introduces their research group, MIT's ARCLab, and its focus areas: AI for space, space sustainability, space weather, analytical astrodynamics, and entry, descent, and landing (EDL).

Challenges in Powered Descent Guidance

- Current optimal control approaches for powered descent guidance are not computationally efficient enough for complex space missions, especially those with high mass requirements such as human exploration missions to Mars or the Moon.

- The key challenge in EDL is computing precise landing guidance solutions on the order of milliseconds on constrained flight hardware.

Previous Work in Deep Reinforcement Learning for EDL

- The speaker discusses previous work in deep reinforcement learning for entry, descent, and landing, including:

  - Using neural networks to move from simplified 3-degree-of-freedom solutions to complex 6-degree-of-freedom solutions.

  - Handling uncertain environments and unknown dynamics.

  - Adaptive guidance solutions that can learn and adapt online.

Terrain-Relative Navigation

- The speaker highlights the importance of terrain-relative navigation for landing on planets without GPS and presents a reinforcement learning approach to analyze terrain information and determine safe landing locations.

Transformer-Based Powered Descent Guidance

- The main topic of the talk is the use of Transformers to predict tight constraints for powered descent guidance. The speaker provides a link to the paper and the GitHub code for this work.

- The motivation for this approach is to improve computational efficiency in handling complex constraint geometries, such as those encountered in rover applications.

- Transformer-based neural networks are used to map problem parameters to the predicted tight constraints because they can process the entire input sequence in parallel and attend over all of it.

- Transformer-based powered descent guidance enables millisecond-level prediction of the optimal strategy, reducing the runtime of the 3-degree-of-freedom landing problem.

- The Transformer-based approach outperforms other methods in terms of computational time without sacrificing optimality.

- The Transformer-based approach reduces the optimization space by identifying active constraints, leading to faster optimization.

- The worst-case time complexity is still better than that of the original problem, even when all constraints are active.

- The Transformer inference time is negligible compared to the overall optimization time.

- The approach aligns with existing space hardware and does not demand more compute.
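The benefit of knowing the active (tight) constraints ahead of time can be illustrated with a toy problem. The sketch below is not the speaker's powered descent formulation. It assumes a small 2-D projection problem, minimize 0.5*||x - c||^2 subject to A x <= b, and all names (`solve_with_active_set`, `solve_full`, `predicted_tight`) are hypothetical. The "predicted" tight set is hard-coded where a trained Transformer's output would go, and a brute-force search over candidate active sets stands in for a general-purpose solver.

```python
from itertools import combinations

def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]

def solve_with_active_set(c, A, b, active):
    """Minimize 0.5*||x - c||^2 with the rows in `active` enforced as equalities.

    Returns (x, multipliers), or None if two active rows are linearly dependent.
    Supports at most two active rows (enough in 2-D); rows must be nonzero.
    """
    active = list(active)
    if not active:
        return (float(c[0]), float(c[1])), []
    # Stationarity gives x = c - A_S^T lam, so (A_S A_S^T) lam = A_S c - b_S.
    resid = [dot(A[i], c) - b[i] for i in active]
    if len(active) == 1:
        lam = [resid[0] / dot(A[active[0]], A[active[0]])]
    else:
        a0, a1 = A[active[0]], A[active[1]]
        g00, g01, g11 = dot(a0, a0), dot(a0, a1), dot(a1, a1)
        det = g00 * g11 - g01 * g01
        if abs(det) < 1e-12:
            return None
        lam = [(resid[0] * g11 - g01 * resid[1]) / det,
               (g00 * resid[1] - g01 * resid[0]) / det]
    x0 = c[0] - sum(l * A[i][0] for l, i in zip(lam, active))
    x1 = c[1] - sum(l * A[i][1] for l, i in zip(lam, active))
    return (x0, x1), lam

def solve_full(c, A, b, tol=1e-9):
    """Solve the full problem by trying active sets of size 0..2 until the
    KKT conditions hold (primal feasible, multipliers non-negative)."""
    tried = 0
    for k in range(3):
        for active in combinations(range(len(A)), k):
            tried += 1
            result = solve_with_active_set(c, A, b, active)
            if result is None:
                continue
            x, lam = result
            feasible = all(dot(A[i], x) <= b[i] + tol for i in range(len(A)))
            if feasible and all(l >= -tol for l in lam):
                return x, active, tried
    raise ValueError("no KKT point found; problem may be infeasible")

# Toy data: five half-plane constraints, only the last one is tight at the optimum.
A = [(0, 1), (-1, 0), (0, -1), (1, 1), (1, 0)]
b = [5, 4, 4, 10, 1]
c = (3, 2)

x_full, active_full, tried = solve_full(c, A, b)

# Stand-in for a learned prediction (assumption: the predictor is correct here).
predicted_tight = [4]
x_reduced, _ = solve_with_active_set(c, A, b, predicted_tight)

print("full search:", x_full, "active set", active_full, "after", tried, "candidate solves")
print("reduced    :", x_reduced, "after 1 solve")
```

In this toy setting the reduced solve reaches the same point as the full search with a single linear solve, which mirrors the claim that a correct tight-constraint prediction shrinks the optimization without changing the optimum. A real system would still need to check feasibility of the reduced solution, since a learned predictor can be wrong.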

Ongoing Work and Future Improvements

- Collaboration with a team at NASA's Johnson Space Center is ongoing to discuss validation and approval of the algorithms.

- Weighting mechanisms that account for the relevance of each constraint are suggested as a potential improvement to constraint satisfaction.