Stanford EE274: Data Compression I 2023 I Lecture 6 - Arithmetic Coding

18 Apr 2024 (over 1 year ago)

Arithmetic Coding

- Arithmetic coding is a data compression technique that assigns shorter binary representations to more probable sequences.

- It treats the entire data as a single block, making it a streaming algorithm with linear complexity of O(n).

- The algorithm maps input sequences to a range on the number line and communicates that range to the decoder.

- Shorter intervals on the number line require more bits to describe, while longer intervals require fewer bits.

- More probable sequences correspond to larger intervals and hence shorter bit lengths.

- Arithmetic coding achieves an expected code length that is within two bits of the entropy of the source.

- Encoding and decoding with arithmetic coding are linear time processes.

- Unlike Huffman coding, arithmetic coding does not require a pre-constructed code book.

- Arithmetic coding can handle varying probabilities at each step.

- Precision issues can arise as the intervals become very small, which can be addressed by releasing common initial digits.

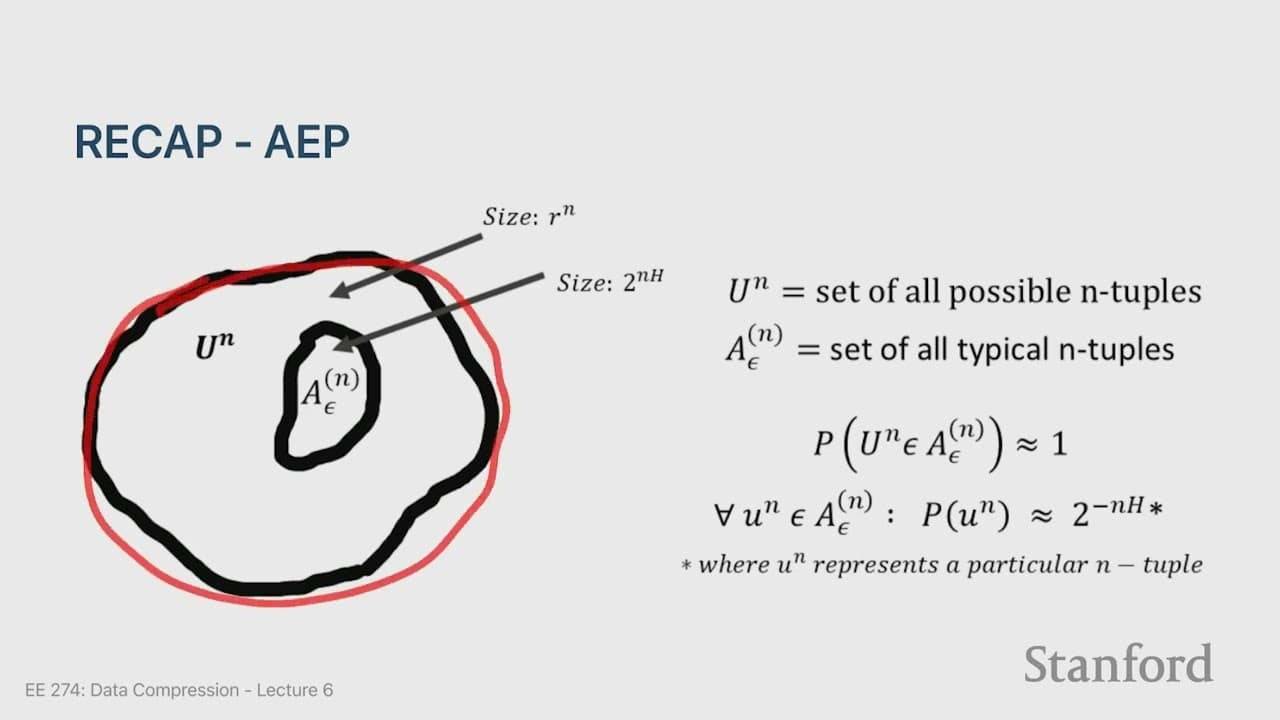

Comparison with Block Coding

- Block codes can achieve entropy as the block size increases, but the convergence rate of 1/B is not ideal.

- Exponential complexity in codebook size makes block codes impractical for large block sizes.

- The overhead of 1/B can be significant compared to the entropy, especially when the entropy is small.

Comparison with Huffman Coding

- Arithmetic coding is more efficient than Huffman coding in terms of compression, but it is slower due to the arithmetic operations involved.

Modern Codes

- Two modern codes, developed in the last 10-20 years, are TS and range coding, which offer faster decompression speeds while still achieving similar compression ratios to arithmetic coding.