Stanford CS25: V3 I Retrieval Augmented Language Models

25 Jan 2024

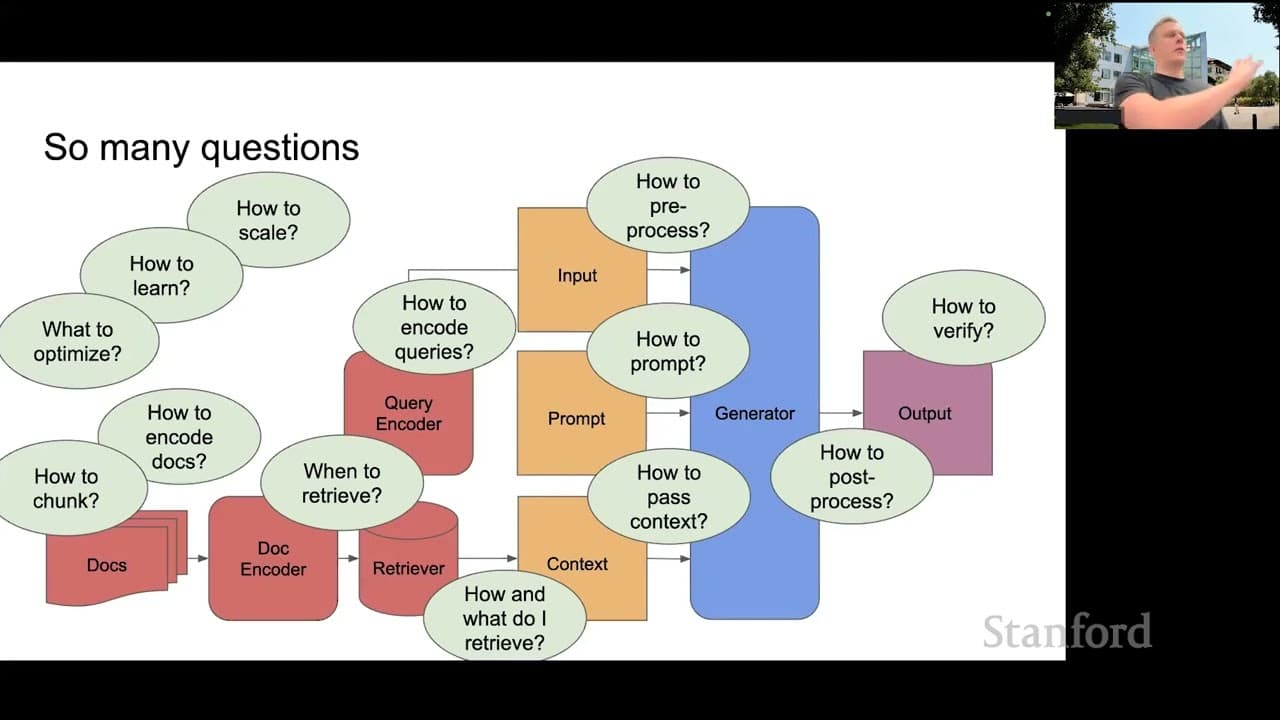

Retrieval Augmentation in Language Models

- Retrieval augmentation uses a retriever to fetch relevant information from an external memory (an index) and passes it as context to a language model (the generator).

- Variations include updating only the query encoder, updating both the query and document encoders, and in-context retrieval.

- Two things matter in practice: instruction tuning, and training the retriever and generator together.

- Open questions and areas for future research include pre-training of retrieval augmented systems, scaling laws, and measuring the effectiveness of retrieval.

- There is potential for multimodal retrieval augmentation and the use of retrieval augmentation in other domains beyond text.

- The talk emphasizes optimizing the entire retrieval-augmented system rather than the language model alone.
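
The retriever-plus-generator setup in the first bullet can be sketched as follows. This is a minimal illustration, not the lecture's implementation: the `DOCUMENTS` list, the token-overlap scorer in `retrieve`, and the prompt layout in `build_prompt` are all assumptions made for the example, and the generator call itself is left out.

```python
from collections import Counter

# Toy external memory (assumption): a real system would use a large document index.
DOCUMENTS = [
    "BM25 is a sparse ranking function based on term frequency.",
    "Dense retrievers embed queries and documents into a shared vector space.",
    "Temperature controls how flat the sampling distribution is.",
]

def tokenize(text):
    """Lowercase, whitespace-split, and strip basic punctuation."""
    return [w.strip(".,?!").lower() for w in text.split()]

def retrieve(query, documents, k=2):
    """Return the k documents sharing the most tokens with the query (toy scorer)."""
    q = Counter(tokenize(query))

    def overlap(doc):
        d = Counter(tokenize(doc))
        return sum(min(q[t], d[t]) for t in q)

    return sorted(documents, key=overlap, reverse=True)[:k]

def build_prompt(query, passages):
    """Place the retrieved passages in front of the question as context."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"

query = "How do dense retrievers work?"
passages = retrieve(query, DOCUMENTS, k=1)
prompt = build_prompt(query, passages)
print(prompt)  # this string would be passed to the generator
```

Swapping the overlap scorer for a learned encoder, or training it jointly with the generator, changes only `retrieve`; the generator still sees the retrieved text as ordinary context.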

Tuning the Transformer architecture like convolution layers

- Can the Transformer architecture be optimized in the same way convolution layers are?

- A paper on light convolutions suggests that its computational model is slightly better suited to GPU computation than the Transformer.

Two-stage process for retrieval

- Use BM25 as the first stage to cast a wide net for retrieval.

- Use a dense model as the second stage to narrow down the results.
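
The two stages above can be sketched as below. The BM25 scorer is a plain-Python version using the common `k1` and `b` defaults and a Lucene-style IDF. The `toy_embed` function is a hypothetical stand-in for a learned dense encoder (it hashes character trigrams), used only so the example runs without a model. The names `DOCS`, `first_k`, and `final_k` are assumptions for illustration.

```python
import math
import zlib
from collections import Counter

def tokenize(text):
    return [w.strip(".,?!").lower() for w in text.split()]

def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score every document against the query with BM25."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(docs)
    avg_len = sum(len(t) for t in tokenized) / n_docs
    doc_freq = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            df = doc_freq[term]
            idf = math.log((n_docs - df + 0.5) / (df + 0.5) + 1)
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores

def toy_embed(text, dim=256):
    """Hypothetical stand-in for a dense encoder: hashed character trigrams, L2-normalized."""
    vec = [0.0] * dim
    s = text.lower()
    for i in range(len(s) - 2):
        vec[zlib.crc32(s[i:i + 3].encode()) % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]

def two_stage_search(query, docs, first_k=3, final_k=1):
    # Stage 1: BM25 casts a wide net and keeps the first_k best candidates.
    sparse = bm25_scores(query, docs)
    candidates = sorted(range(len(docs)), key=lambda i: sparse[i], reverse=True)[:first_k]
    # Stage 2: re-rank only those candidates by cosine similarity of embeddings.
    q_vec = toy_embed(query)

    def dense(i):
        return sum(x * y for x, y in zip(q_vec, toy_embed(docs[i])))

    reranked = sorted(candidates, key=dense, reverse=True)[:final_k]
    return [docs[i] for i in reranked]

DOCS = [
    "retrieval augmented generation uses an external index",
    "convolution layers are common in vision models",
    "dense retrieval narrows down the candidate results",
    "temperature controls sampling randomness",
]
print(two_stage_search("dense retrieval", DOCS, first_k=2, final_k=1))
```

The design point is cost: the cheap sparse scorer touches every document, while the more expensive dense scorer only runs on the `first_k` survivors.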

Adapting models to domain-specific areas

- Two potential ways: instruction tuning or meta-tuning.

- All approaches will likely come together in the end, with fine-tuning on the specific use case.

Hardware for efficient retrieval

- There are dedicated retrieval hardware solutions in development or already available.

- Efficient dense retrieval is a significant market.

Hallucination in language models

- Hallucination refers to when the language model produces output that does not correspond to the retrieved information.

- The term is often used loosely for any mistake or incorrect answer, but it refers more specifically to output that runs counter to the ground truth.

Defining ground truth and controlling hallucination

- Ground truth can be defined differently based on the index used.

- Architecture can be designed to control the level of hallucination and the reliance on the ground truth.

Tuning the temperature for sampling

- Temperature controls how flat the sampling distribution is: higher values flatten it, and lower values concentrate it on the most likely tokens.

- Even with a low temperature, random outputs can still occur.

- More sophisticated methods are needed to control sampling.
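
The effect of temperature can be seen in a small sketch of the standard temperature-scaled softmax, where each logit is divided by the temperature before normalizing. The logits below are made-up values for three candidate tokens, and the function name is an assumption for illustration.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities; temperature must be greater than 0."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.0]  # made-up scores for three candidate tokens
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Lowering the temperature moves probability mass toward the top token, but every other token keeps a nonzero probability, which is why unlikely outputs can still be sampled at low temperature.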